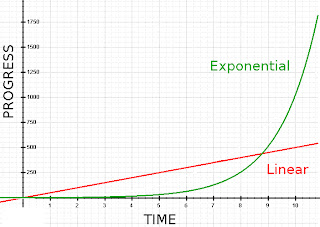

Anyway, Ray Kurzweil has been criticized for claiming that all technologies are progressing at an exponential rate rather than a linear one, and that thinking linearly leads people to overestimate progress in the short term and grossly underestimate it in the long term.

There is room for criticism, because not all technologies are moving at an exponential rate. Cures for different cancers, gas mileage for cars, and the comfort of computer chairs haven't progressed the way the speed of decoding the human genome has.

So the criticism is semi-warranted because Kurzweil does overstate his case a little bit, but does it really matter? I don't think so. If only one technology continues to improve at the exponential rate that it has historically been on, then the criticism is really just window dressing distracting from the implications that are fun to think about. That technology is, of course, the computer.

So long as the progress of computers is exponential (which it is), the implications will be vast enough to make Kurzweil's predictions for the most part correct. Looking at what 'Watson' did a couple of weeks ago in understanding human language and answering questions, it is impossible not to look into the future and think about the possibilities, some of which were mentioned in my last post.

As Yogi Berra is credited with saying, 'It is hard to make predictions, especially about the future', but Kurzweil has a good track record and there is really no harm done in being wrong. The people who foresaw the flying car never broke into your house and peed on your rug.

Apart from the flying car, one prediction seems to stand out from the rest: when will humans build something that can be described as having a self? When will something be built and said to be conscious? Well, that's a tricky question to answer, for a number of different reasons.

The standard test for telling if an animal has the concept of self is the mirror test, which involves putting an animal in front of a mirror with a dot on its body that it can only see in the mirror. The great apes, dolphins, orcas, elephants and a couple of species of birds have all passed the mirror test. To pass the test, the animal has to notice the dot in the mirror and connect it to its own body. This shows an understanding that the someone in the mirror is 'me', and curiosity about why there is a dot on my back. As a control, a dot is also placed on an area of the animal that is out of sight when it is looking in the mirror, to help rule out coincidental actions.

This test has been used to show that babies up to 18 months, dogs and cats don't react to the dot in any way and therefore lack the concept of the self, which can be noticed by anyone who has seen very young or handicapped children play 'with the boy/girl in the mirror.'

Well, this test wouldn't work at all for computers, because they could be specifically made to pass the mirror test and respond to a dot put on them. It really breaks our standards to think about how to test for consciousness in a machine, which is why people look to the Turing Test as the mirror test for computers. The test involves two chat boxes, one connected to another human and one to a computer program, and a person talking to both. The person then has to decide which one is the program and which is the human. When the two are indistinguishable, the computer has passed the test.

But not all people feel that the Turing Test demonstrates much. A few philosophers like John Searle have gone so far as to say that the Turing Test proves nothing about consciousness, or in effect that a talking tree could never be seen as conscious (I don't remember who argued that). I'm not in their line of thinking, but I do feel that some part of the self involves having goals and motivations, and while a chat program can say it has those things, it lacks the ability to act on them. This is not to say that something that passes the Turing Test isn't conscious, just that I'm not sure that I would be ready to call it conscious yet.

Another problem is that the Turing Test isn't looking for consciousness, it's looking for human consciousness. It's by definition looking for something that makes human errors and talks like another person would. It's telling when Kurzweil says that the computer will have to dumb itself down to pass the test, because it's not an ideal test of consciousness, and therein lies the rub: nothing is. If the program were elegant enough to throw in a few spelling mistakes, vulgarities and misconceptions, it could probably make someone think it was yours truly, but it would have used parlor tricks to do so.

The mirror test doesn't work for programs, and the Turing Test only works to find something compatible with human understanding. It could also be gamed by programs making the kind of mistakes that people wouldn't think "computers" would make.

In fact, the whole topic might be a moot point. People subjectively deciding what is and isn't conscious seems like a huge can of worms. If this surely scientifically done poll on Just Labradors Forum, with the appropriate measures to account for bias, is correct, then 78% of people believe that dogs are self-aware, 21% are undecided and 0% think that they are definitely not self-aware. People are biased into thinking that biological things have consciousness and that machines don't, and the first conscious machines will be abused due to that fact.

When a machine passes the Turing Test and shows reasonable signs that it is conscious, it would be ethical to just treat it as such, seeing how the criteria are so poorly defined and no test seems ideal.

Thanks for reading,

-themoralskeptic