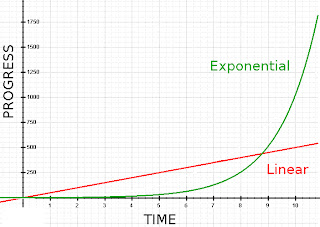

Anyway, Ray Kurzweil has been criticized for claiming that all technologies are progressing at an exponential rate rather than a linear one, and that thinking linearly leads people to overestimate progress in the short term and grossly underestimate it in the long term.

Yet there is room for criticism, because not all technologies are moving at an exponential rate. Cures for different cancers, gas mileage for cars, and the comfort of computer chairs haven't progressed the way the speed of decoding the human genome has.

So the criticism is semi-warranted because Kurzweil does overstate his case a little bit, but does it really matter? I don't think so. If only one technology continues to improve at the exponential rate that it has historically been on, then the criticism is really just window dressing, distracting from the implications that are fun to think about. That technology is, of course, the computer.

So long as the progress of computers is exponential (which it is), the implications will be vast enough to make Kurzweil's predictions for the most part correct. Looking at what 'Watson' did a couple of weeks ago in understanding human language and answering questions, it is impossible not to look into the future and think about the possibilities, some of which were mentioned in my last post.

As Yogi Berra is credited with saying, 'It is hard to make predictions, especially about the future', but Kurzweil has a good track record and there is really no harm done in being wrong. The people who foresaw the flying car never broke into your house and peed on your rug.

Apart from the flying car, one prediction seems to stand out from the rest: when will humans build something that can be described as having a self? When will something be built and said to be conscious? Well, that's a tricky question to answer, for a number of different reasons.

The standard test for telling if an animal has the concept of self is the mirror test, which involves putting an animal in front of a mirror with a dot on its body that it could only see in the mirror. The great apes, dolphins, orcas, elephants and a couple of species of birds have all passed the mirror test. To pass the test, the dot has to be noticed in the mirror and connected to the body of the thing in front of the mirror. This shows an understanding that the someone in the mirror is 'me', and curiosity about why there is a dot on my back. As a control, a dot is also placed on an area of the animal that is out of sight when it is looking in the mirror, to rule out coincidental actions.

This test has been used to show that babies up to 18 months, dogs and cats don't react to the dot in any way and therefore lack the concept of the self, which can be noticed by anyone who has seen very young or handicapped children play 'with the boy/girl in the mirror.'

Well, this test wouldn't work at all for computers, because they could be specifically made to pass the mirror test and respond to a dot put on them. It really breaks our standards to think about how to test for consciousness in a machine, which is why people look to the Turing Test as the mirror test for computers. The test involves two chat boxes, one connected to another human and one to a computer program, and a person talking to both. The person then has to decide which one is a program and which is a human; when the two are indistinguishable, the computer has passed the test.

But not all people feel that the Turing Test demonstrates much. A few philosophers like John Searle have gone so far as to say that the Turing Test proves nothing about consciousness, or in effect that a talking tree could never be seen as conscious (I don't remember the name of who argued that). I'm not in their line of thinking, but I do feel that some part of the self involves having goals and motivations, and while a chat program can say it has those things, it lacks the ability to act them out. This is not to say that something that passes the Turing Test isn't conscious, just that I'm not sure that I would be ready to call it conscious yet.

Another problem is that the Turing Test isn't looking for consciousness, it's looking for human consciousness. It's by definition looking for something that makes human errors and talks like another person would. It's telling when Kurzweil says that the computer will have to dumb itself down to pass the test, because it's not an ideal test of consciousness, and therein lies the rub: nothing is. If the program was elegant enough to throw in a few spelling mistakes, vulgarities and misconceptions, it could probably make someone think it was yours truly, but it would have used parlor tricks to do so.

The mirror test doesn't work for programs, and the Turing Test only works to find something compatible with human understanding, and could be prone to programs taking advantage by making mistakes that people wouldn't think "computers" would make.

In fact, the whole topic might be a moot point. People subjectively deciding what is and isn't conscious seems like a huge can of worms. If this surely scientifically done poll on Just Labradors Forum, with the appropriate measures to account for bias, is correct, then 78% of people believe that dogs are self-aware, 21% are undecided and 0% think that they are definitely not self-aware. People are biased into thinking that biological things have consciousness and that machines don't, and the first conscious machines will be abused due to that fact.

When a machine passes the Turing Test and shows reasonable signs that it is conscious, it would be ethical to just treat it as such, seeing how the criteria are so poorly defined and no test seems ideal.

Thanks for reading,

-themoralskeptic

Very interesting article. I am not convinced that seeing a dot in a mirror and realizing it is on oneself proves a concept of self. It proves a concept of reflection, and that the thing looking may recognize that the dot is on its body. If a dog looks down and sees a leaf on its paw, it sniffs the leaf. That does not mean it knows that the leaf is on "its" body, per se, but if it does then reflection is not needed for a concept of self. A leaf and a paw is all that's needed.

Good post. I have read about the Turing test and I'm not sure if I buy into it. Like you said, judging what is conscious and what isn't seems all very subjective, and this is an excellent point...

Having a "judge" on consciousness seems very moot indeed, since it is impossible, a paradox, to know or experience someone else's subjectivity. The fact that a computer can fool a human into thinking it is another human is merely proof of a program effective at such a task. Nothing else.

I've always believed that the human mind is inextricably linked to the human body. Our brain developed over time as an organ which has a role in keeping us alive and ensuring our reproduction. The mind can be seen as an autonomous abstraction of the brain, but it can never fully detach itself from its link to the "real" world, the physical world as experienced through the body posited in time and space.

Unlike a conscious being, a computer isn't born out of a will to live; it is constructed by other living forms. Its role, its identity, will always be supplanted by the being that created it (in our case, humans).

To be considered equal, it would have to break away from the programming it was originally given by its creator. A computer passing the Turing test is merely following its orders. The true test is beyond: what will the computer do next? It has to decide for itself through its own subjective experience, its own genius. Not that of its creator. Only then will I accept that a computer is "like" a human.

Whether anyone wants to interpret this theologically is up to them... :)

btw Steve, that was me who just posted. I selected the wrong profile. I promise to update my blog again... just hard to find the time.

Also, I posted on one of your older entries, the Atheist and 2 funerals.

Well, I appreciate the comments.

First off, I'm not saying that someone/thing has to see itself in the mirror to have an understanding of being a 'self'. As I said, a computer doing that proves nothing at all except that it can detect things around it.

It does mean a lot more for animals, though. The fact that animals generally give the impression that they are seeing another member of their species clearly shows that they lack the ability to connect themselves to the image in the mirror.

I think the leaf is a poor example. Take for instance a shark that allows a cleaner fish to eat the parasites off it. This shark can feel another fish around its body and can even tell that it's not supposed to eat it. It has made a connection between having feeling in different parts of its body and knowing what its body is, but reacting to a leaf or knowing that something is around your body doesn't imply going further and knowing, 'Hey, that's me!'.

Reflection is needed, as the persistent self doesn't exist as a moment-to-moment reaction. No one is arguing that animals don't know they have a body; they are saying that animals don't have the self as a top-down control mechanism.

If anyone has any other test for animal consciousness let me know.

Second, I agree with about 98% of what you said, Andrew, but I do have one issue.

"To be considered equal, it would have to break away from the programming it was originally given by its creator. A computer passing the Turing test is merely following its orders."

I'm not sure I agree with that. A computer cannot be programmed with the specific instruction to 'act like a human'; it has to be novel enough to respond to novel questions, no matter the subject. Its programming has to be loose enough to seem real. A brute-force answer can't be prepared for every question a person would ask, and the context and previous questions also have to be weighed, which makes it impossible to just program a computer to be a person.

The only way to do it would be to give a program a human information base and work from there, perhaps programming in a personality, perhaps not.

To be considered an equal it will have to be superior to us, to overcompensate for the inherent biases of looking at a computer as a conscious self.

Cool. Is that like a JungleGym of psychosomatic skepticism? Tell me more. I'm intrigued.

I never said that a computer program that passes the Turing test needs to be programmed to "be" a person. It merely needs to be programmed to "act" like a person, to put on a performance.

What I was trying to say was that however convincing or uncanny a performance a computer gives during a Turing test, it is only evidence of what the test attempts to prove: that a human can be fooled about conscious identity via a computer screen. The computer would have to go much further than to fool one human about his identity; he would have to, for lack of better words, fool himself. Unless, long after the Turing exercise, the computer learns from his experiences, starts asking himself questions like "What am I doing here? How did I get here? I'm human aren't I? Or what am I?", and begins to autonomously prepare himself in anticipation of future experiences, he isn't yet conscious. If the computer needs a framing purpose, such as someone inputting information (the judge during the Turing test, for example), in order to have "brain" activity, then it is merely responding to a human's input, not creating his own. He is still a program, and not an independent consciousness.

Only once he moves past the Turing test, once he reflects on his experiences and creates a personal identity out of them, can a computer truly be called "equal" in my standards...

Funny way of putting it...such a good comment that that's my next post.
